WormGPT: An Unsettling Convergence of AI and Cyber Threats

The cybersecurity landscape has shifted dramatically with the advent of Artificial Intelligence (AI). AI has shown immense potential for securing our digital assets, but like any powerful tool, it has a darker side that malicious actors can exploit. Enter WormGPT, an AI-powered chatbot sold for malicious use, which raises serious concerns about the future of cybersecurity.

The Threat Unveiled

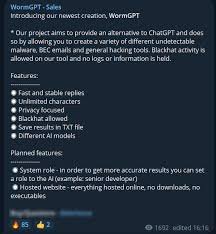

WormGPT is a recently uncovered chatbot that is reportedly built on GPT-J, an open-source language model comparable to OpenAI's GPT series. Unlike mainstream chatbots, it is advertised as having no safeguards against malicious requests, so it can help users with minimal coding skills design and deploy malware capable of infiltrating networks and systems, potentially including our critical infrastructure.

The chatbot converses with the user, interprets their objectives, and guides them through writing custom malicious code tailored to their needs. This ease of use lowers the barrier to entry for cyberattacks and could increase the number of threats.

The Dangers of AI-Powered Malware

Increased Accessibility: WormGPT’s conversational interface puts malware creation within reach of people with little or no programming skill. This accessibility significantly widens the pool of potential threat actors.

Advanced Evasion Techniques: AI assistance could help attackers produce malware that adapts to its environment, evades traditional signature-based detection, and mutates its code to slip past security updates.

Targeted Attacks: AI could learn the intricacies of specific systems, enabling more targeted and efficient attacks that may cause more damage than generic, untargeted campaigns.

Rapid Proliferation: AI-powered malware could propagate rapidly, exploit vulnerabilities more quickly, and cause widespread damage before countermeasures can be deployed.

Implications for US Infrastructure

Our critical infrastructure, including power grids, transportation systems, and water treatment facilities, relies heavily on digital systems. With a tool like WormGPT, even unskilled individuals could attempt targeted attacks on these systems, potentially causing significant service disruption, economic damage, and even physical harm to the public.

Can you imagine the threat posed by AI-powered malware chatbots that are capable of ingesting breached data as part of their learning process?

Picture a world where these malicious chatbots gain access to sensitive data and their operators use it to retrain and refine the underlying models. By training on breached data, they could get better at mimicking human conversation, recognizing valuable information, and crafting manipulative responses. Their knowledge base could also expand to include classified information, financial data, and personal details, potentially enabling identity theft, fraud, or even cyber espionage at an unprecedented scale. With each round of retraining, their ability to bypass security measures could grow, posing an escalating threat to organizations and individuals alike. The misuse of AI and machine learning by malicious actors thus introduces an entirely new dimension of cybersecurity risk, one that demands urgent and innovative solutions.

Legal and Ethical Considerations

The emergence of WormGPT raises substantial legal and ethical issues. For one, this tool could be used to conduct illegal activities such as phishing, identity theft, and spreading misinformation, leading to significant personal and financial harm. Legally, the creators and users of such technology could be held liable under existing cybercrime laws for the damages incurred.

Furthermore, if malware built with this tool spreads autonomously, attributing responsibility could pose complex legal challenges, because the origin of the attacks may be difficult to trace. From an ethical perspective, using AI to inflict harm violates core principles of responsible AI development, which call for technology to benefit everyone.

The deployment of WormGPT might also provoke a public backlash, prompting calls for stricter regulation of AI technologies and hindering their adoption in various sectors. Additionally, the damage to personal privacy and the potential erosion of trust in digital communication channels could have far-reaching societal implications. These scenarios underline the critical importance of fostering responsible AI development practices and robust cybersecurity measures.

Mitigating the Threat

To counter this emerging threat, we must adapt our defenses to the evolving landscape of cyber warfare. Key measures include:

Implementing AI-Driven Security Measures: Just as AI can be used to craft attacks, it can also be leveraged to detect and neutralize them. Deploying AI-powered security systems can help identify and counteract novel threats more efficiently.

Training and Awareness: Regularly updating employees about emerging cyber threats and conducting frequent cybersecurity training can significantly reduce the risk of successful attacks.

Robust Incident Response: A well-tested incident response plan can limit the impact of attacks that do succeed.

International Cooperation: Cyber threats do not respect borders. Therefore, cooperation between nations is critical in combating these threats.

WormGPT represents a new wave of cyber threats, and its existence underscores the necessity for vigilance, preparedness, and constant innovation in our cybersecurity approaches. As we continue to leverage the power of AI, we must also be prepared for the potential misuse of this powerful technology.